Turn your computer into an AI machine - AI videos with ComfyUI and NVIDIA Cosmos models

Introduction

Last time I wrote about AI videos with ComfyUI and HunyuanVideo model. Since a while there are so called World Models from NVIDIA: NVIDIA Cosmos. As usual normally these models are nothing for graphics cards with VRAM < 24 GByte which you would need at least (so only a RTX 4090 or RTX 5090 could deal with them). But again luckily the great ComfyUI community made it possible to run them on my RTX 4080 SUPER with 16 GByte VRAM. But even a RTX 4070 (TI) with 12 GByte VRAM should work thanks to automatic ComfyUI weight offloading!

The VAE (Variational Autoencoder) used is able to generate a 1280x704 sized video with 121 frames on a 12 GByte VRAM GPU. That’s way more memory efficient as the previously used HunyuanVideo VAE. But you’ve to stay with the 7B model to make it work with 12 or 16 GByte of VRAM.

Nevertheless there are a few things to note about that model that make it less appealing:

- Stay with 121 frames otherwise the model might start breaking.

- Lowest resolution is 704x704. If possible stay with a height of

704and for the with either use704or1280. - It’s important to use a few sentences for the prompts as you’ll see later in the example. Otherwise the result wont be very good.

- It’s slower than the

HunyuanVideomodel. Generating a1280x704video with 121 frames takes around 12 minutes on my RTX 4080 SUPER (it’s not that much slower than on a 4090). - You can turn of heating in winter 😄

The model is physics aware and was trained to generate high-quality videos from multimodal inputs like images, text, or video.

Prerequisites

I assume you’ve ComfyUI already installed and configured. If not please read my blog post about ComfyUI - private and locally hosted AI image generation or AI videos with ComfyUI and HunyuanVideo model

Also make sure that you have the latest ComfyUI version installed. You definitely need version 0.3.11 or higher. If you’ve ComfyUI-Manager installed as suggested in one of the previous blog posts it’s just a click on the Manager button on the top right, Update ComfyUI and then Restart.

NVIDIA Cosmos models installation

To make NVIDIA Cosmos work a few files need to be downloaded as usual.

Download oldt5_xxl_fp8_e4m3fn_scaled.safetensors text encoder

Open the cosmos_1.0_text_encoder_and_VAE_ComfyUI webpage and download the file oldt5_xxl_fp8_e4m3fn_scaled.safetensors. Put into $HOME/comfyui/ComfyUI/models/text_encoders/ directory. That’s about 4.6 GByte.

Note for FLUX.1 model users (and others maybe): oldt5_xxl is t5xxl version 1.0. FLUX.1 uses t5xxl version 1.1. So they’re not the same!

Download cosmos_cv8x8x8_1.0.safetensors VAE

Open the cosmos_cv8x8x8_1.0.safetensors website and download the file cosmos_cv8x8x8_1.0.safetensors. Put it into $HOME/comfyui/ComfyUI/models/vae directory. That’s around 211 MByte.

Download Cosmos-1_0-Diffusion-7B-Text2World.safetensors text to video model

Open the Cosmos-1_0-Diffusion-7B-Text2World.safetensors website. Download the file Cosmos-1_0-Diffusion-7B-Text2World.safetensors. Put it into $HOME/comfyui/ComfyUI/models/diffusion_models directory. That’s around 14.5 GByte.

Download Cosmos-1_0-Diffusion-7B-Video2World.safetensors video/image to video model

Open the Cosmos-1_0-Diffusion-7B-Video2World.safetensors website. Download the file Cosmos-1_0-Diffusion-7B-Video2World.safetensors. Put it into $HOME/comfyui/ComfyUI/models/diffusion_models directory. That’s around 14.5 GByte.

Download the text to video workflow

Download the text to video workflow (right click on the link with your mouse) and save it somewhere temporary.

Download the image to video workflow

Download the image to video workflow (right click on the link with your mouse) and save it somewhere temporary.

Test the models

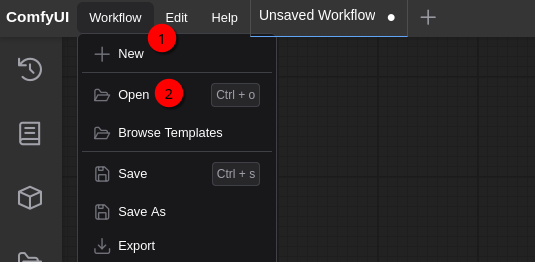

Loading the text to video workflow

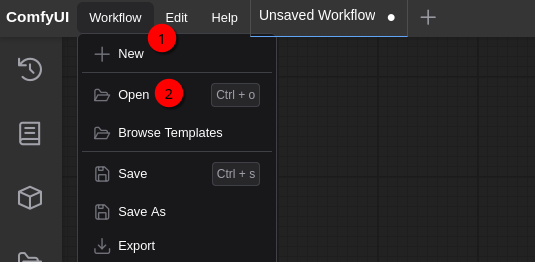

Select Workflow (1) and Open (2). Open the workflow file text_to_video_cosmos_7B.json. You should get something like this:

For the start I’ll keep everything as is. It’s always a good idea to start with something that works already. In 1, 2 and 3 you see the file names that were downloaded above. If you put them in the wrong directory or if the files are not found and you click Queue button later, you’ll get an error. Fix it accordingly if needed.

In contrast to the FLUX.1 model this model uses a positive (4) and negative (5) prompt (FLUX.1 only needs a positive one).

As mentioned in the introduction keeping the height at 704 in EmptyCosmosLatentVideo node (6) is important. Same for length which should be kept at 121. width should be 704 or 1280 for best results.

In KSampler node there is sampler_name set to res_multistep. This sampler can be used with every model supported by ComfyUI. The ComfyUI blog mentions that this sampler also gives good results for HunyuanVideo model I covered last time. So maybe give it a try.

So with everything setup lets press Queue (7) to generate the video. It takes a bit until the rendering starts as the video models need to be loaded into the graphics card VRAM first. If you used the same settings it takes around 12 minutes to generate the video on my 4080 SUPER. If you’ve a different graphics card the time varies of course. And for me the result looked like this:

Pretty impressive! So I wanted to try my own prompt now:

A slow motion drone camera flight over a sunflower field where the drone should start within some sunflowers and increasing heights over time. The drone itself is never visible. The viewpoint is always the drone camera. The drone camera should always look at the sunflower field. In the background in the sky there should be sunrise in the early morning and some birds flying around. Finally the drone should loose height and get back into the sunflower field.

Well, not bad but the model only respected half of my prompt I’d say. But it’s fine for the first try.

Next I wanted to check if it’s possible to improve the prompt with AI help. So I opened Open WebUI and pasted the prompt above. As model I had chosen the o1-mini model from OpenAI. Then I just added some introductory text:

Can you please extend the prompt below so that an AI video model follows the prompt more closely. I’m using the NVIDIA Cosmos 7B text 2 video model. Here is my current prompt: ….

The model gave me a pretty long prompt:

Create a slow-motion aerial drone video showcasing a vibrant sunflower field during the serene early morning hours. The scene begins with the drone positioned gently among a cluster of tall sunflowers, with the camera angle looking downward to capture the intricate details of the blossoms and green stems. Ensure that the drone remains entirely invisible throughout the footage, maintaining the illusion of a natural, unobstructed viewpoint. As the video progresses, the drone smoothly ascends, gradually increasing its altitude to reveal a sweeping panoramic view of the expansive sunflower field. The upward movement should be steady and graceful, emphasizing the vastness and beauty of the blooming sunflowers stretching towards the horizon. The camera should consistently focus on the sunflower field, keeping the flowers as the central element in every frame. In the background, depict a stunning early morning sunrise with a colorful sky painted in hues of orange, pink, and soft purple. The rising sun casts a warm, golden light over the field, creating gentle shadows and highlighting the vibrant yellows of the sunflowers. Incorporate a few graceful birds flying across the sky, adding a sense of life and movement to the tranquil setting. After reaching the peak of its ascent, the drone begins a smooth descent, gradually lowering its altitude to return to the heart of the sunflower field. The descent should mirror the initial ascent in its steadiness and grace, maintaining the focus on the sunflowers throughout the entire flight path. Ensure that the lighting remains consistent, capturing the early morning glow as the sun continues to rise.

I put that text back into my ComfyUI workflow into the positive prompt. The negative prompt I left empty. The result was okay but not that great. So I switched back to Open WebUI and asked the model to please also create a negative prompt:

Can you also create a negative prompt as the model also requires one?

And that’s what I got:

No visible drone, no drone parts, no shadows or reflections of the drone. Smooth and steady camera motion without abrupt or jerky movements. Exclude unrelated objects such as buildings, vehicles, people, or other vegetation. Avoid visual glitches, pixelation, compression artifacts, and distortions. No overly saturated or unnatural colors; maintain warm, vibrant palette. Remove distracting elements like power lines, fences, or litter. Prevent overexposure, excessive glare, and harsh shadows. No text overlays, logos, watermarks, or branding. Only include specified small flock of birds (sparrows or swallows) with natural flight patterns. Ensure high-resolution quality without blurriness or lack of detail. Exclude unrelated sound elements like traffic or machinery. Avoid repetitive visual patterns and extreme weather conditions. Maintain consistent, natural lighting and exclude special effects or animations.

Back in ComfyUI I added the negativ prompt accordingly. And now the result looked already quite nice:

So from my testing so far the negative prompt makes definitely a difference. The funny thing is that none of the prompts removed the drone as I requested. Also the sunflowers are currently not looking very realistic as with my very first test.

Hint: There is also a Cosmos-1.0 Prompt Upsampler Text2World available: Cosmos-1.0-Prompt-Upsampler-Text2World is a large language model (LLM) designed to transform original input prompts into more detailed and enriched versions. It improves the prompts by adding more details and maintaining a consistent description structure before they are used in a text-to-world model, which generally leads to higher quality outputs.. But I guess that one is to big for my 4080…

And here is a comparison with HunyuanVideo using the same positive prompt:

Create a video from a image description generated by Llama 3.2-vision:11b

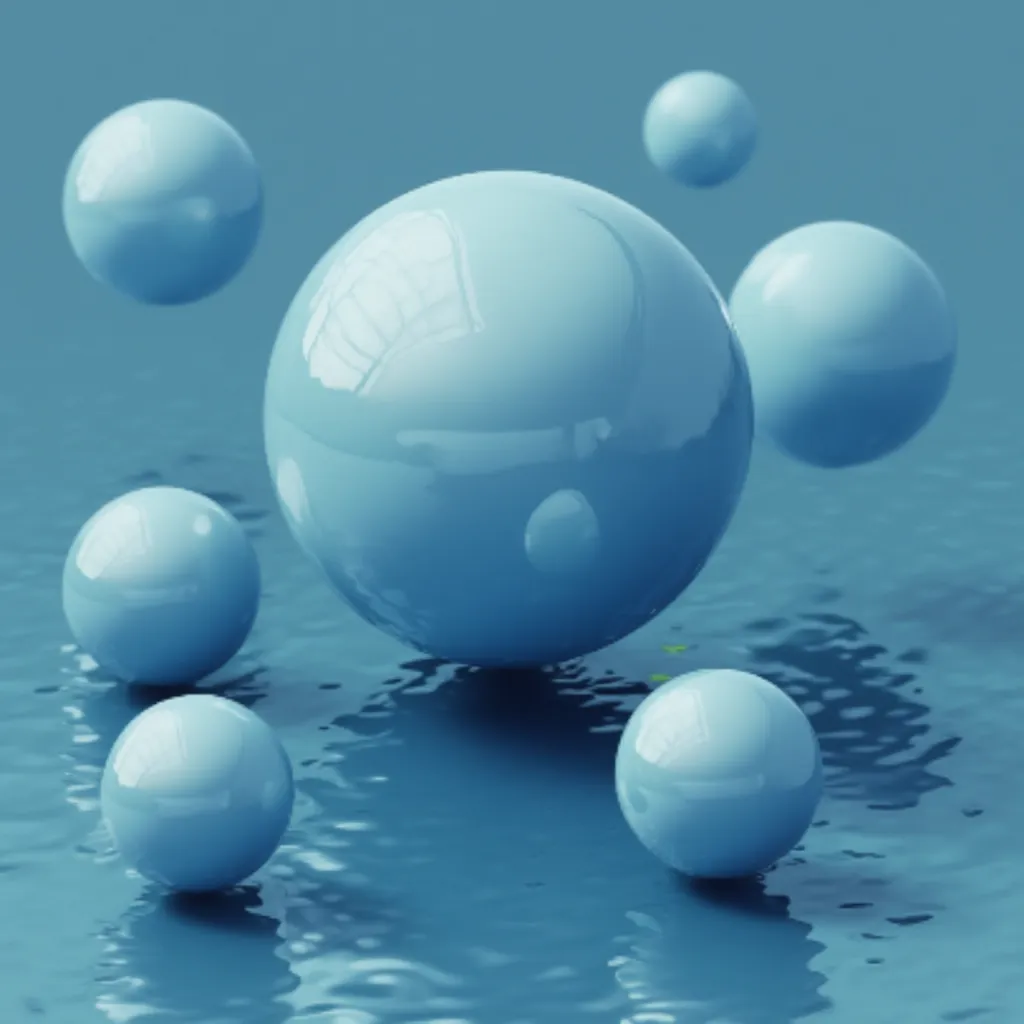

And now my usual test… 😉 In one of my previous blog posts I asked the Llama 3.2-vision:11b model to describe what’s on a book cover I provided. The answer was like this:

The picture features a number of blue spheres floating above water or another reflective surface, with some spheres casting shadows onto the surface below.

The largest sphere is centered and sits atop what appears to be a dark reflection that extends outward from its base, resembling a shadow. It reflects light in various directions, creating different shades of blue across its surface.

Surrounding this are four smaller spheres, arranged in a square formation with one in each corner. Each one casts a shadow onto the reflective surface below and also reflects light in various directions.

The image is framed by an off-white border that adds some contrast to the rest of the picture. The background color is a medium blue.

Again I was very interested to ask the NVIDIA Cosmos text to video model to create a video out of that description 😄 So after DALL-E, Juggernaut XL, FLUX.1-dev and HunyuanVideo lets see once again if that description not only works for images but also for a video. To describe what motion I want to have for the video I only added the following text at the end of the description above:

The smaller spheres should turn around the largest, centered sphere.

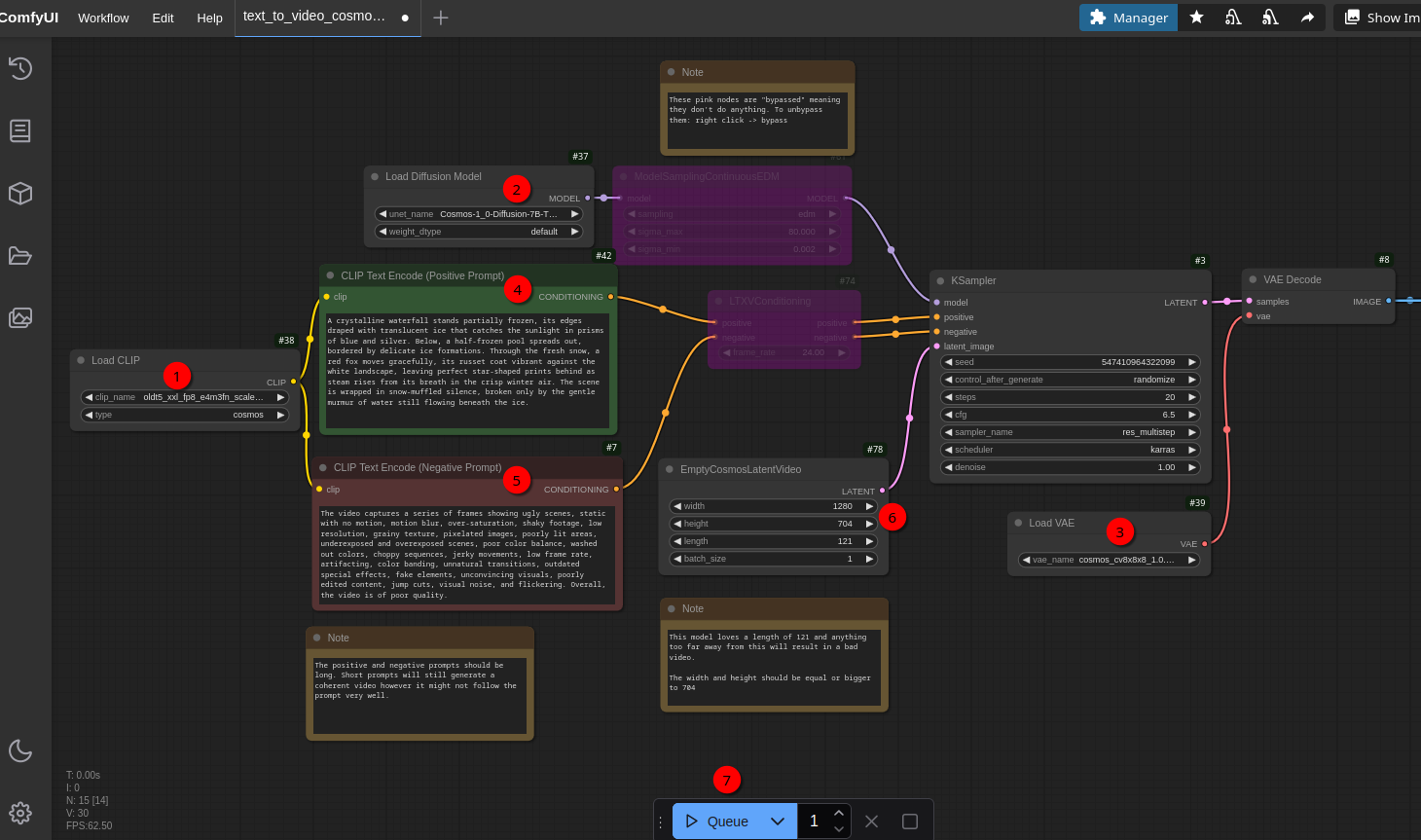

And that’s the result:

Well, that’s a bit disappointing 😉 HunyuanVideo did way better - even that the positive prompt is most probably pretty bad for video models in general… I guess I need to tune it a bit.

So lets do the same as above. I took the prompt and put it again into Open WebUI using Llama 3.2-vision:11b model this time and put my introductory text:

Can you please extend the prompt below so that an AI video model follows the prompt more closely. I’m using the NVIDIA Cosmos 7B text 2 video model. Here is my current prompt: …

That created a pretty long positive prompt:

Create a captivating video featuring a mesmerizing arrangement of blue spheres suspended above a serene water or reflective surface. The largest sphere, situated at the center, rests upon what appears to be a dark, rounded shadow that radiates outward from its base, creating a sense of depth and dimensionality. This central sphere reflects light in various directions, generating an array of blues and subtle shading across its surface. Surrounding this focal point are four smaller spheres, evenly spaced in a square formation with one sphere situated at each corner of the composition. Each smaller sphere casts a shadow onto the reflective surface below, adding to the overall sense of depth and visual interest. Like the central sphere, these smaller orbs reflect light in multiple directions, creating intricate patterns of blues and subtle shading. The entire scene is framed by an elegant off-white border that provides a clean and refined contrast to the rest of the image. The background color is a soothing medium blue, subtly blending with the surrounding elements to create a cohesive and harmonious visual experience. In terms of lighting, incorporate soft, gentle illumination that accentuates the reflections on the spheres’ surfaces without creating harsh shadows. Consider adding some subtle texture or ripples to the water or reflective surface below to enhance the overall sense of realism and engagement. Style-wise, aim for a dreamy, ethereal quality that evokes a sense of wonder and enchantment. Think ‘surrealism meets minimalist chic.’ Use gentle gradients, soft colors, and delicate shading to create an image that’s both captivating and calming.

I also asked for a negative prompt:

Can you also create a negative prompt as the model also requires one?

And this is what I got:

Overly sharp or defined edges on the spheres, instead opt for soft, rounded forms. Harsh shadows or unflattering lighting that creates unsightly dark spots or highlights. Distinctive textures or patterns on the water or reflective surface below, instead aim for a smooth, glass-like finish. Bright, saturated colors that overpower the overall mood, instead focus on subtle, muted tones. Unnatural or unrealistic reflections on the spheres’ surfaces, such as mirror-like duplicates of surrounding objects. Any visible artifacts or noise in the image, particularly around the off-white border or background.

And here is the result:

Well, HunyuanVideo did better without the AI optimized prompt. So what does HunyuanVideo produces when using the positive prompt above:

As you can see it’s not that easy. A lot to experiment with. The main problem is of course that it takes quite long to generate the videos. So there is basically only one try every 10 minutes or so…

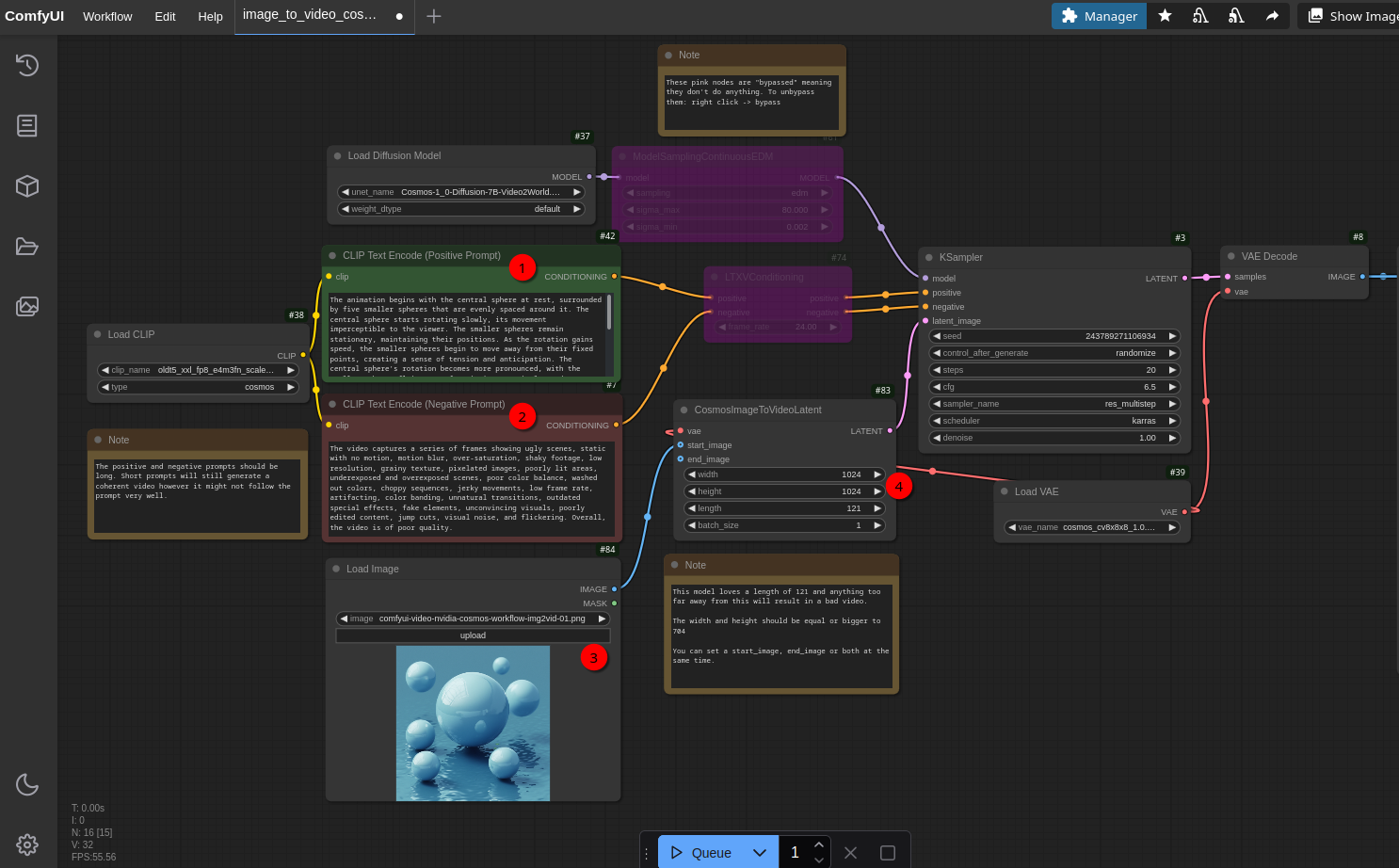

Loading the image to video workflow

One advantage about NVIDIA Cosmos is that you can also create videos from one or more images. So lets try that too.

Select Workflow (1) and Open (2). Open the workflow file image_to_video_cosmos_7B.json. You should get something like this:

My idea was to use an image that I generated earlier with ComfyUI and FLUX.1-dev from a book cover as a starting point (it’s already loaded in the screenshot above):

I used Open WebUI to upload the picture using the Llama 3.2-vision:11b model to create a positive prompt to describe the video motion. The prompt was:

The image shows a few smaller spheres around a central, bigger sphere. I want to use a AI video model to create a video out of that image. Please describe a motion in detail in around five to seven sentences were the central, bigger sphere starts rotation slowly while getting faster over time. While this sphere rotates faster, the smaller spheres around that one should be fly away over time because of the fast rotation of the bigger sphere.

This gave me a pretty long prompt that I condensed a bit:

The animation begins with the central sphere at rest, surrounded by five smaller spheres that are evenly spaced around it. The central sphere starts rotating slowly, its movement imperceptible to the viewer. The smaller spheres remain stationary, maintaining their positions. As the rotation gains speed, the smaller spheres begin to move away from their fixed points, creating a sense of tension and anticipation. The central sphere’s rotation becomes more pronounced, with the smaller spheres flying away from it in a seemingly random pattern. The animation reaches its climax as the spheres’ movements become more chaotic and unpredictable. The animation slows down, with the central sphere coming to a stop. The smaller spheres continue to move away from their original positions, but at a slower pace.

Since NVIDIA Cosmos is physics aware and the motion definitely requires some physics I was curious what the output will be. And that’s the result:

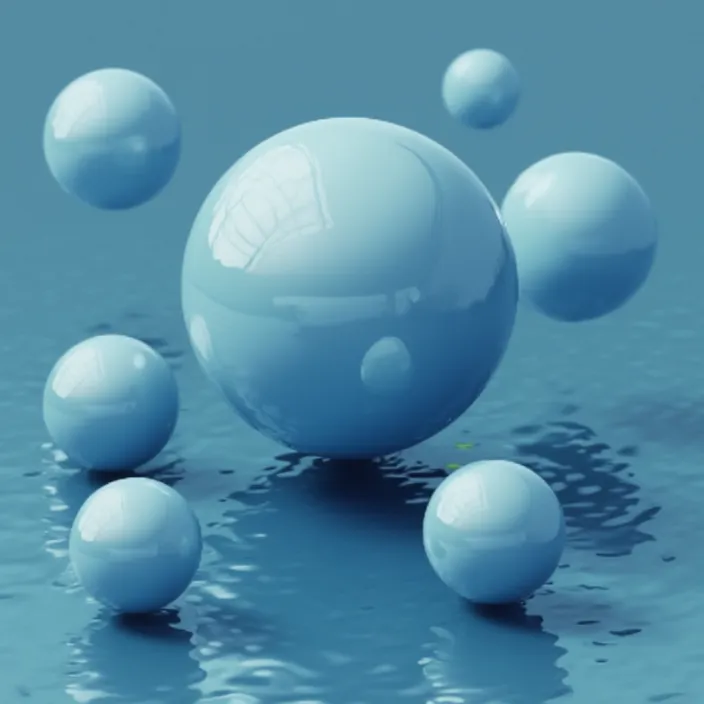

Well, not that bad for the very first try 😄 I was curios what I get if I just change the video size to 704x704 and keeping everything else as is. And that’s the result:

If it comes to motion of the smaller spheres that video is a bit better - at least in the first half of the video 😉 I guess I need to work on my “prompting” skills 😄 But in general it looks like that it’s a bit more complicated to get something useful out of image to video then on text to video if you don’t have too much experience.